Small lab network setup: Ubiquiti and D-Link Covr

SmartShepherd is preparing to head into a new office. Sort of. We are shifting back out of my house and moving into a shed. I'm excited about getting the unholy mess upstairs into a decent workshop environment. It's cramped upstairs with two people working, and the workbenches are just too small to be productive when doing any kind of assembly or repair work.

The shed itself already has fibre to the premises thanks to the NBN rollout here in Armidale. I didn't want to set up anything too complicated or expensive since our needs are relatively modest, but there were a few features I wanted to have:

- The ability to do VLANs easily

- A solid firewall

- Some traffic shaping

- The ability to do small scale self hosting

- Mesh wifi

- A guest wifi network completely firewalled out of the office/lab network
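The VLAN and guest-isolation requirements come down to giving each network its own subnet and making sure none of them overlap. Here is a minimal sketch of that sanity check in Python. The VLAN IDs, network names and address ranges are made up for illustration and are not the ones used in the actual setup.

```python
import ipaddress
from itertools import combinations

# Hypothetical plan: VLAN IDs and subnets are illustrative only.
PLAN = {
    "office": (10, ipaddress.ip_network("192.168.10.0/24")),
    "lab": (20, ipaddress.ip_network("192.168.20.0/24")),
    "guest": (30, ipaddress.ip_network("192.168.30.0/24")),
}


def overlapping_pairs(plan):
    """Return pairs of network names whose subnets overlap."""
    return [
        (name_a, name_b)
        for (name_a, (_, net_a)), (name_b, (_, net_b)) in combinations(plan.items(), 2)
        if net_a.overlaps(net_b)
    ]


def network_for(address, plan):
    """Return the name of the network containing the address, or None."""
    ip = ipaddress.ip_address(address)
    for name, (_, net) in plan.items():
        if ip in net:
            return name
    return None
```

A check like this only confirms the addressing plan is clean. Keeping the guest network out of the office/lab network still depends on the firewall rules on the router.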

That's not one box, that's three

I quickly realised that nothing on the market would cover all the possibilities. I was going to need a good router, a mesh network, and a server.

So, something better than a single router/wifi hotspot that you would typically buy for an NBN setup. I dug through the Cisco catalog for a while but in the end, Kogan advertised a 50% off offer on the Ubiquiti 10x router that ticked all the boxes except wifi.

For that I headed to a local retailer. In our house we have one of the original Google Wifi setups, which they have confusingly half-renamed "Nest", but the newer versions of that looked fairly expensive and Google have merged the routing functions into the main mesh pod. I wanted the flexibility of being able to run the office mesh wifi in bridge mode, which the Google setup possibly does. However, I hadn't done a lot of research, and standing in front of the displays trying to work out whether the newer Google gear did bridge mode was too hard. The D-Link Covr boxes were also three-fifths of the price of the Google devices and advertised bridge mode extensively, so I bought those instead.

Blade server is not what you want

I am a big fan of HP Enterprise servers. Easy to work on, cheap to get parts for on the second hand market, well supported by Linux and currently being retired in their thousands by small-medium businesses who are moving everything to the cloud. My main workstation is a re-purposed HPE tower (a ProLiant ML330 G6) which is ancient but has been hot-rodded with 96GB of memory and the two fastest 6 core Xeons supported by the BIOS. Debian reports it as having 24 total processors so it's perfect for running several containers and VMs at near metal speed. I wanted another one but since it isn't going to be doing double duty as a workstation, a rack server would work.

Late at night on eBay, I was skimming through the specs of any HP equipment and came across an incredibly cheap listing for something that had dual 3.0GHz Xeons, 72GB of memory and an SSD boot disk. I couldn't buy the processors and RAM for the price of this thing, but it's a whole server! Boom, a button press later and it's on the way. An HP ProLiant BL460c G6. In the morning, when I did the research I should have done before buying it, it was clear that the blades by themselves are completely useless as they need to be put in a chassis. Some brave souls have attached a 12V power supply to them directly and used the front port to host a USB ethernet connection, but forget that noise.

The Blade stripped bare. Unless you get one of these for a bargain price, forget using them as a server by themselves.

The server has to be set up properly, not dangerously wired to something it wasn't designed for. Plus the huge advantage of running something like a ProLiant is that they have multiple network interfaces, so you can dedicate an entire card to a bridge, define a pile of taps and set your containers and VMs so that they appear like actual machines on the network. It's more secure and it's a tonne faster.
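As a rough illustration of the bridge-and-taps idea, here is a small Python sketch that builds the `ip` (iproute2) commands for attaching one spare NIC and a few tap devices to a bridge. It only returns the command strings and does not run anything. The names `br0`, `eth1`, `tap0` and `tap1` are placeholders rather than the names used on this server, and a permanent setup on Debian would more likely live in the network configuration files than in ad hoc commands.

```python
def bridge_commands(bridge, nic, taps):
    """Build iproute2 commands that bridge one NIC with a set of tap devices.

    All interface names are supplied by the caller; nothing is executed here.
    """
    cmds = [
        f"ip link add name {bridge} type bridge",  # create the bridge
        f"ip link set dev {nic} master {bridge}",  # dedicate the NIC to it
        f"ip link set dev {nic} up",
    ]
    for tap in taps:
        cmds += [
            f"ip tuntap add dev {tap} mode tap",       # one tap per VM/container
            f"ip link set dev {tap} master {bridge}",  # attach the tap to the bridge
            f"ip link set dev {tap} up",
        ]
    cmds.append(f"ip link set dev {bridge} up")
    return cmds


if __name__ == "__main__":
    # Placeholder names, for illustration only.
    for cmd in bridge_commands("br0", "eth1", ["tap0", "tap1"]):
        print(cmd)
```

Each VM or container then gets pointed at its own tap, so it shows up on the physical network with its own MAC address, just like a separate machine.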

Techbuyer to the rescue

I wasn't completely in the hole just yet: the price of the blade was less than the price of the processors and memory. I hate to waste things, but the rest of the blade is useless other than as spares, and given other people are also stripping these things for their memory and CPUs, the spares are both plentiful and worthless. Off to the recycler for that part.

I contacted the people at Techbuyer (from whom I have bought server parts for my 330 before) and they happily sold me a bare-bones ProLiant DL360 G7 rack server, which my memory and CPUs would work in. The 360 has 4 high speed ethernet ports, 8 of the loudest fans in history and a single expansion slot (more on that later).

Bench testing the new lab setup. Actually, that's a kitchen table not a bench. My wife is very understanding

Plan: Hit the ground running

I want to see if it all works before we head down to our shed in a few days, so the only thing to do is to test everything. The Ubiquiti router is trivial to set up. It offers a web interface as well as a CLI, but the initial setup is easiest over the web. I hooked up the eth0 port to a little Sony Vaio laptop and used the wizards to do a basic configuration: eth0 is the internet connection, eth9 plugged into the Covr box. D-Link annoyingly uses a phone-based app to do their setup, but it's easy enough to navigate once installed. This is set up with 2 Covr points running in bridge mode. A quick test and I can see the Ubiquiti is doing the DHCP properly and my phone is on the Covr wireless network. The second point is upstairs. I have a benchtop D-Link POE switch there (the Cassia Networks X1000 we use for Bluetooth uses POE). With the Covr plugged into that switch, all the upstairs gear is now connected to the internet. It works!

The bit where it all comes unstuck

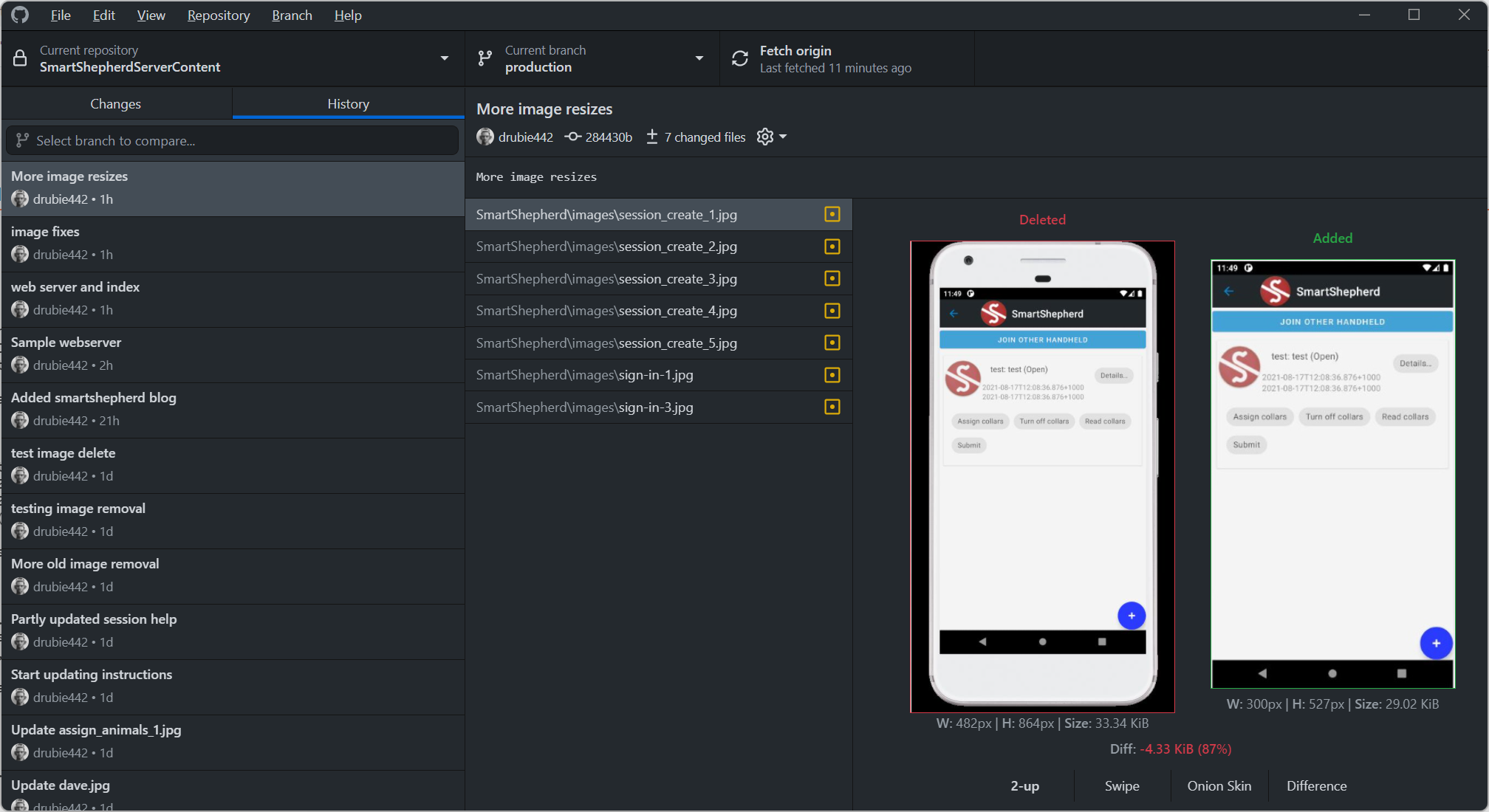

Don't be me. Do some research before you decide to make a frankenserver

So I have my mock-up network, now I need to pillage the blade and build the new rack server. Except the CPU heatsinks...don't fit. They use a different mounting system to the correct heatsinks. Techbuyer don't have any (well, they don't want to sell them without CPUs) and eBay has a single one in Australia. A pair will come from the US ($10 each I think) but shipping at the moment is hit and miss, so I don't know whether I will see them tomorrow or in two months. The solution? Take a hacksaw to the ears of the blade heatsinks and cable tie them on. It is not pretty but it works. They are also too tall, but as a temporary solution they work fine with a little duct tape around the cover to make sure the airflow is close to correct.

One of the functions of that server is to run a Macintosh OS X VM, which works a lot better if you can dedicate a video card to it. For that purpose I bought an ATI HD6570 (note: these are cheap but super capable), which now nestles in the back of the rack server. Debian 11 is now installed on it and it's ready to go. The entire network (including the printer!) is working as if installed in our shed, so bring on Monday!